| Three Effect Demonstrations (Win32) | 2006-7-19, ZIP File 396kB |

This page discusses a few techniques I have experimented with to produce interesting effects in OpenGL that run at realtime speeds on a modest graphics processor. I have not written a tutorial for each of these techniques, but I describe them at a high level below. You can download and play with the compiled demos. Unfortunately, the source code has been lost and can't be provided.

The three effects are a blurring technique using texture maps, refraction using a cube map, and shadow volumes implemented as a vertex shader.


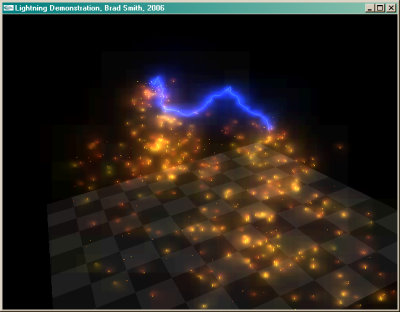

I wrote this after thinking about bloom filter effects and how to simulate them. I rendered all of the bright objects in the scene to a texture (rendering the other objects black so that they still occlude), then used interpolated textures with alpha blending to generate a new texture that is a blurred version of the original. There are many ways to accomplish a blur: there are convolution extensions (which are usually slow), or you can do the convolution yourself by overlaying several offset copies of the same texture with varying weights, but this is also slow for large blurs. My solution works something like a mip-map: render the scene at 256x256 and copy the result to a texture. Next, render that texture at half the dimensions and copy the result to another texture, and repeat for about five more layers. Once generated, all of these layers can be stretched back to the full 256x256 size and blended on top of each other. The result is a pretty good approximation of a Gaussian blur, and it runs quickly. Once this texture is generated, it can be blended on top of your final rendered scene.
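The layered blur can be sketched on the CPU to show why it works. This is a minimal illustration, not the demo's code: it assumes a square, power-of-two grayscale image stored as nested lists, uses a 2x2 box average in place of "render at half size with linear filtering", bilinear stretching in place of texture magnification, and equal blend weights for every layer (the text does not give the weights). The function names are hypothetical.

```python
def downsample(img):
    """Halve a square image by averaging each 2x2 block (a box filter).

    Stands in for drawing the previous layer at half size with linear
    texture filtering and copying the result to a new texture.
    """
    n = len(img) // 2
    return [[(img[2 * y][2 * x] + img[2 * y][2 * x + 1]
              + img[2 * y + 1][2 * x] + img[2 * y + 1][2 * x + 1]) / 4.0
             for x in range(n)] for y in range(n)]


def upsample(img, size):
    """Stretch a square layer back to size x size with bilinear filtering,
    sampling at texel centres and clamping at the edges."""
    n = len(img)
    step = n / size

    def coord(i):
        # Map output pixel i to a fractional source coordinate, clamped to the image.
        f = min(max((i + 0.5) * step - 0.5, 0.0), n - 1.0)
        i0 = int(f)
        return i0, min(i0 + 1, n - 1), f - i0

    out = []
    for y in range(size):
        y0, y1, ty = coord(y)
        row = []
        for x in range(size):
            x0, x1, tx = coord(x)
            top = img[y0][x0] * (1 - tx) + img[y0][x1] * tx
            bottom = img[y1][x0] * (1 - tx) + img[y1][x1] * tx
            row.append(top * (1 - ty) + bottom * ty)
        out.append(row)
    return out


def pyramid_blur(img, halvings=6):
    """Blur a square, power-of-two image: build a chain of half-size layers,
    stretch each back to full size, and average them with equal weights."""
    size = len(img)
    layers = [img]
    for _ in range(halvings):
        layers.append(downsample(layers[-1]))
    stretched = [upsample(layer, size) for layer in layers]
    return [[sum(s[y][x] for s in stretched) / len(stretched)
             for x in range(size)] for y in range(size)]


if __name__ == "__main__":
    size = 32
    impulse = [[0.0] * size for _ in range(size)]
    impulse[16][16] = 1.0  # a single bright pixel
    blurred = pyramid_blur(impulse, halvings=4)
    print(round(blurred[16][16], 4), round(blurred[16][18], 4))
```

For a 256x256 source, `halvings=6` gives layers of 256, 128, 64, 32, 16, 8 and 4 pixels. Each small layer spreads a bright pixel over a wide area when stretched, so summing them approximates a wide, smooth falloff at the cost of only a few cheap passes.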

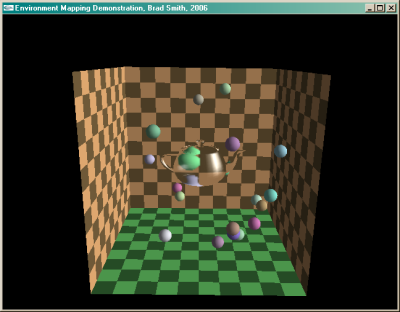

This was an attempt to simulate refraction with an environment map. The environment map here is rendered to a cube map texture by setting up a camera with a 90-degree field of view and pointing it in six directions from the centre of the object. I assume that everything in this cube map is one particular distance away, pretending the view is the inside of a sphere. (This makes the calculations easier, but causes objects to look too big or too small depending on how far they are from that imaginary sphere surface.) To use this map for refraction, I first find where a ray from the eye through a particular vertex intersects this imaginary sphere (this is done in a vertex shader). The vector from the centre to that intersection represents a ray of light that went straight through the object with no refraction. To simulate the bending of light, I treat the reversed normal at that vertex (pointing into the object) as the direction light would take under total refraction. Instead of doing an accurate calculation of angles using quaternions to apply Snell's Law in 3D, I simply interpolate between the two vectors by a specified factor. This produces a very good result with far less calculation, and since we are already working with an approximation due to the assumed shape of the environment map, it is quite reasonable.

On top of the cube map lookups, extra tangibility is achieved by adding specular highlights (see Phong's reflection model), not only on the outside surface but on the inner surfaces as well. Technically, the inner surface reflections should appear stretched by the refraction, but this would be very difficult to implement in realtime.
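The specular term from Phong's model can be sketched as below. How the demo lit the inner surfaces is not stated, so `inner_surface_specular` is a hypothetical helper that simply flips the outward normal of a back face, matching the text's note that the refraction of these highlights is ignored.

```python
import math


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _normalize(a):
    length = math.sqrt(_dot(a, a))
    return tuple(x / length for x in a)


def phong_specular(normal, to_light, to_eye, shininess):
    """Phong specular term (R . V)^shininess, where R is the light direction
    mirrored about the normal. Returns 0 when the light is behind the surface."""
    n = _normalize(normal)
    l = _normalize(to_light)
    v = _normalize(to_eye)
    n_dot_l = _dot(n, l)
    if n_dot_l <= 0.0:
        return 0.0
    r = tuple(2.0 * n_dot_l * nc - lc for nc, lc in zip(n, l))
    return max(_dot(r, v), 0.0) ** shininess


def inner_surface_specular(normal, to_light, to_eye, shininess):
    """Hypothetical: highlight on a back face seen through the object.

    The inside of the surface faces the opposite way, so the outward normal
    is flipped. The highlight is not bent by refraction.
    """
    return phong_specular(tuple(-c for c in normal), to_light, to_eye, shininess)
```

The result would be added on top of the refracted cube map colour, once for front faces and once for back faces.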

The direction vector passed to the cube map is calculated per vertex and interpolated across each triangle for the fragment shader. As a result, the fragment shader does very little work, and the program runs efficiently. (The provided demonstration also has a reflection mapping mode, which simulates a mirrored surface.)
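The reflection mode and the cube map lookup itself can be sketched as follows. `reflect` uses the standard mirror formula (the same one as GLSL's built-in `reflect()`), and `cube_face_lookup` follows the major-axis face-selection table from the OpenGL specification. Both function names here are hypothetical; this shows why an interpolated per-vertex vector needs no per-fragment work beyond the lookup.

```python
def reflect(incident, normal):
    """Mirror an incident direction about a unit normal: I - 2 (N . I) N."""
    d = sum(i * n for i, n in zip(incident, normal))
    return tuple(i - 2.0 * d * n for i, n in zip(incident, normal))


def cube_face_lookup(direction):
    """Pick the cube map face and (s, t) for a direction using the major axis.

    Only the direction matters, not the length, so an interpolated per-vertex
    vector can be used without renormalizing it. Ties go to X, then Y.
    """
    rx, ry, rz = direction
    ax, ay, az = abs(rx), abs(ry), abs(rz)
    if ax >= ay and ax >= az:
        face, sc, tc, ma = ("+X", -rz, -ry, ax) if rx > 0 else ("-X", rz, -ry, ax)
    elif ay >= az:
        face, sc, tc, ma = ("+Y", rx, rz, ay) if ry > 0 else ("-Y", rx, -rz, ay)
    else:
        face, sc, tc, ma = ("+Z", rx, -ry, az) if rz > 0 else ("-Z", -rx, -ry, az)
    return face, (sc / ma + 1.0) / 2.0, (tc / ma + 1.0) / 2.0
```

In the reflection mode, `reflect(eye_to_vertex, normal)` would replace the refraction direction as the per-vertex texture coordinate.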

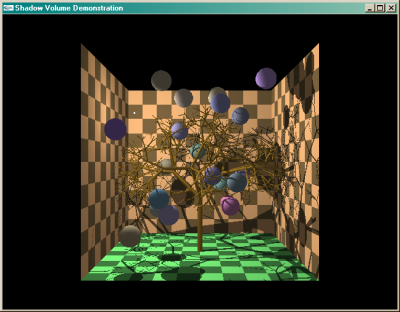

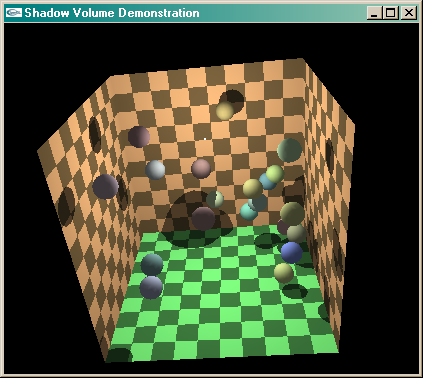

Shadows are an interesting problem. There are many ways to draw them, but many of them end up being either too slow or too inaccurate. The most effective solution may be what is known as shadow volumes. (There are very good articles on shadow volumes at GameDev.net and Gamasutra for further reading.) A shadow volume is the three-dimensional region shadowed by a particular object. If part of an object we are drawing lies inside such a volume, it should appear in shadow.

There are two main problems with shadow volumes. The first comes from the fact that OpenGL renders surfaces: how, using surfaces, are we to figure out whether an object lies in shadow? The solution is fairly straightforward: count the number of times a ray from the eye enters a volume through a front surface before hitting the object, and the number of times it leaves a volume through a back surface. If these counts are equal, the object is not in shadow and should be drawn normally. This is accomplished using two rendering passes (one for shadowed surfaces, one for lit surfaces), a depth buffer (to determine which volume surfaces lie in front of the objects), and a stencil buffer (to count the shadow volume surfaces). There are more details, but the implementation is adequately described in the Gamasutra article mentioned above, so I won't go into them here. My particular implementation actually counts shadow volume surfaces behind the object rather than in front (sometimes known as Carmack's Reverse, or z-fail), but it gives the same result.
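The counting rule can be simulated along a single eye ray to show that the two variants agree. This is a sketch, not the demo's stencil setup: each shadow volume polygon the ray crosses is given as a hypothetical `(depth, is_front_face)` pair, and a nonzero count means "in shadow", as the stencil test would treat it. Depth-pass counts crossings in front of the surface; depth-fail (Carmack's Reverse) counts those behind it.

```python
def zpass_count(crossings, surface_depth):
    """Stencil value under depth-pass counting.

    crossings: (depth, is_front_face) for each shadow volume polygon the eye
    ray crosses. Front faces in front of the surface add 1; back faces in
    front of the surface subtract 1.
    """
    count = 0
    for depth, is_front in crossings:
        if depth < surface_depth:
            count += 1 if is_front else -1
    return count


def zfail_count(crossings, surface_depth):
    """Stencil value under depth-fail counting (Carmack's Reverse): only
    polygons behind the surface count. Back faces add 1; front faces subtract 1."""
    count = 0
    for depth, is_front in crossings:
        if depth > surface_depth:
            count += -1 if is_front else 1
    return count


def in_shadow(count):
    return count != 0


if __name__ == "__main__":
    volume = [(2.0, True), (5.0, False)]  # one closed volume, eye outside it
    for depth in (1.0, 3.0, 6.0):
        print(depth, in_shadow(zpass_count(volume, depth)), in_shadow(zfail_count(volume, depth)))
    inside = [(5.0, False)]  # eye inside the volume: its front face is behind the eye
    print(in_shadow(zpass_count(inside, 3.0)), in_shadow(zfail_count(inside, 3.0)))
```

With the eye outside the volume, both rules agree for surfaces before, inside and beyond it. With the eye inside, depth-pass reports the surface at depth 3 as lit while depth-fail correctly reports it in shadow, which is why counting behind the object is the more robust choice, provided the volumes are capped.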

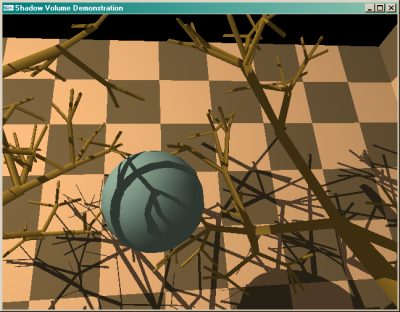

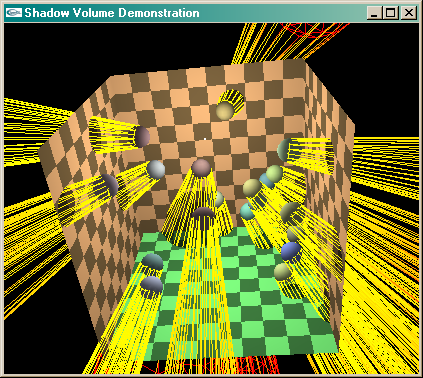

The second problem is figuring out the shape of the shadow volumes. This can be done in software by determining which faces of a shape face the light and which do not. The edge between them is the silhouette edge, which must be connected to a second set of points: the silhouette edge projected away from the light to infinity (in practice, onto either the near or far clipping plane). Finding this edge can be computationally expensive, but there is a way to quickly approximate the shape of a shadow volume in a vertex shader. Using only the normal at each vertex, we can determine whether that vertex faces the light, and if it does not, project it onto the near or far clipping plane. Done properly, this also caps the volumes, which solves problems that would otherwise occur when the camera is within a shadow volume.
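Both approaches can be sketched side by side. `silhouette_edges` is the software method: classify each triangle as facing the light or not, then keep the edges shared by one of each. `extrude_vertex` is the per-vertex shortcut a vertex shader could apply. Instead of projecting onto a clipping plane as the text describes, this sketch swaps in the common homogeneous trick of emitting the vertex as a direction away from the light with w = 0, which assumes a projection matrix with an infinite far plane. Both function names and the mesh layout (index triples wound so `cross(b - a, c - a)` points outward) are assumptions for the example.

```python
def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def silhouette_edges(vertices, triangles, light):
    """Software method: edges shared by one light-facing and one
    non-light-facing triangle, as sorted index pairs."""
    facing_by_edge = {}
    for a, b, c in triangles:
        pa, pb, pc = vertices[a], vertices[b], vertices[c]
        normal = _cross(_sub(pb, pa), _sub(pc, pa))
        lit = _dot(normal, _sub(light, pa)) > 0.0
        for edge in ((a, b), (b, c), (c, a)):
            facing_by_edge.setdefault(tuple(sorted(edge)), []).append(lit)
    return {e for e, f in facing_by_edge.items() if len(f) == 2 and f[0] != f[1]}


def extrude_vertex(position, normal, light):
    """Per-vertex approximation: keep vertices whose normal faces the light;
    send the others to infinity away from the light as a homogeneous
    direction (w = 0)."""
    if _dot(normal, _sub(light, position)) >= 0.0:
        return (*position, 1.0)
    return (*_sub(position, light), 0.0)


if __name__ == "__main__":
    verts = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)]
    tris = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]  # closed tetrahedron
    print(sorted(silhouette_edges(verts, tris, (0.2, 0.2, -10.0))))
```

The software version needs edge adjacency and a pass over every triangle whenever the light moves; the per-vertex version needs neither, at the cost of stretching triangles that straddle the silhouette, which is the approximation the text describes.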

In the images above, you can see a complicated scene with shadows from up close and from further away. It rendered at a very reasonable speed on my hardware despite the complexity. You can also see that the low-polygon tree branches still cast very good shadows despite my approximation of their volume shapes. The speed of my implementation depends mostly on fill time, that is, on the screen area covered by the (invisible) shadow volume polygons. They can be quite large, especially when the light is between an object and the viewer. Interestingly, the approximation doesn't add any fill beyond what a more accurate volume calculation would require; it only adds extra vertices, whose cost is negligible compared to the fill time.

In the images above, first you can see a scene with a point light surrounded by spheres which are casting shadows. In the second image you can see wireframe visualizations of the surfaces of the shadow volumes they cast. Any surfaces within a shadow volume appear in shade.

Please note: the shadow demo was developed on a Hercules 3D Prophet 4500 (Kyro II) graphics card and may not function correctly on other hardware. Unfortunately, I have lost its source code in the years since 2006, so I cannot make it more robust across different graphics cards.